One of the most fundamental tools in the toolbox for data developers is loading a data file from a staging, or landing, location into a database. From there the possibilities are endless to transform the data through code to fit your needs. For a lot of developers with on-premises solutions, this takes the form of an SFTP location or a local file share. But with the rapid growth of cloud-based solutions, the tools and methods are changing. I’ve been working a lot with Snowflake lately, so let’s dive into staging data for Snowflake.

Snowflake Stage Options

Snowflake supports a two different options for staging data files; internal and external stages. Internal stages are similar to an SFTP location where you can push (PUT) a file to the target location and then access it from Snowflake as if it were sitting on the “server” for your database. An external stage is a file share hosted on a 3rd party cloud provider, such an Amazon S3 bucket. Snowflake supports external stages hosted on Amazon AWS, Google Cloud Platform or Microsoft Azure.

In this post we’ll create an internal stage, and in a future post, we’ll create an external Amazon S3 stage with an open source data set. Additionally, we’ll assume you have at least a trial account with Snowflake. If you don’t have a Snowflake account, you can set up a free trial account in just a few minutes.

Create An Internal Stage

Before we can put a file into our stage, we have to create it within our Snowflake instance. Creating an internal stage can be done with just a few lines of code. In the code samples below, we will setup the demo environment which will include the the following:

- ST_DEMO_ROLE user role

- ST_DEMO_WH warehouse

- ST_DEMO_DB database

- ST_INTERNAL_STAGES schema

- ST_DEMO_STAGE internal stage

Create New User Role

Creating a role isn’t a necessity in a trial account because you could simply run all this code as the ACCOUNTADMIN role and have hardly any issues with permissions; however, that’s not how things are in the “real world”. In order to simulate real-world conditions and follow recommended best practices, we’ll setup a new user role.

Snowflake best practices recommend managing users and roles with the SECURITYADMIN role which is inherited by the ACCOUNTADMIN role, but can also be granted to specific users who will manage security. Additionally, we’ll grant the new role to the SYSADMIN role so that any objects created in the new role will be inherited by SYSADMIN. Snowflake recommends doing so as best practice since SYSADMIN role cannot be removed, and will remain in place should a user account or user role be removed from the Snowflake account.

In a new SQL Worksheet change to the SECURITYADMIN role, create the new role, GRANT the new role to the current user and the SYSADMIN role.

USE ROLE SECURITYADMIN;

CREATE OR REPLACE ROLE ST_DEMO_ROLE;

SET current_user = CURRENT_USER();

GRANT ROLE ST_DEMO_ROLE TO USER IDENTIFIER($current_user);

GRANT ROLE ST_DEMO_ROLE TO ROLE SYSADMIN;The code block above also shows an example of creating a Snowflake SQL variable and then using the IDENTIFIER function to grant the role to the current user. This isn’t necessary as we could simply type out the specific username to grant the role to, but by using the variable, this block of code is reusable for any user.

Create ST_DEMO_XS_WH Warehouse

A Virtual Warehouse, or “warehouse”, is a cluster of compute resources in Snowflake. A warehouse is needed for tasks such as running queries, performing DML tasks or loading data. The warehouse provides resources like CPU, memory and temporary storage needed for these tasks. Warehouses can scale up, meaning “more power”, or scale out, meaning “more nodes”. In our demo, we’ll use an extra small (XS) warehouse with a single cluster. Creating a stage and loading a file doesn’t need much “horsepower” and a smaller warehouse will consumer fewer credits.

In the code block below, we’ll create a new warehouse, set a few properties like size, number of clusters and the auto suspend time in seconds. Auto Suspend shuts down the warehouse after is has been idle for the set number of seconds in order to conserve credits as well. Auto Resume will restart the warehouse automatically from a suspended state if a query or action in Snowflake requires a warehouse. Additionally, we will need to transfer ownership on the warehouse our newly created role in order to use the warehouse for queries later. Note, this is not necessarily best security practice, but simplifies some of the permissions for this demo.

USE ROLE ACCOUNTADMIN;

CREATE OR REPLACE WAREHOUSE ST_DEMO_XS_WH

WAREHOUSE_SIZE = XSMALL

MIN_CLUSTER_COUNT = 1

MAX_CLUSTER_COUNT = 1

AUTO_SUSPEND = 600

AUTO_RESUME = TRUE

;

GRANT USAGE ON WAREHOUSE ST_DEMO_XS_WH TO ROLE ST_DEMO_ROLE;

Create ST_DEMO_DB Database

Next, we’ll create a new database that will house our stage and also transfer ownership of the database to the new ST_DEMO_ROLE role. By transferring ownership we will grant all rights on to the users in the ST_DEMO_ROLE such as creating schemas, tables and other objects. This keeps things simple for demo purposes; however, is not necessarily recommended best practice. Database ownership should be assigned in accordance with your individual or company security policies.

USE ROLE ACCOUNTADMIN;

CREATE OR REPLACE DATABASE ST_DEMO_DB;

GRANT OWNERSHIP ON DATABASE ST_DEMO_DB TO ROLE ST_DEMO_ROLE;Create Schema and Stage Objects

Next, we’ll create a new schema and stage object in that schema in the new ST_DEMO_DB. Schemas provide a method of organizing your objects into logical groups as needed for your requirements. Schemas also provide a way to apply security policies as needed. For example, perhaps only developers can access certain schemas, while admins or development managers can access all schemas.

To create the schema and stage, first we’ll switch to the ST_DEMO_ROLE role and ST_DEMO_DB database. Next create the schema object and finally create the internal stage. Additionally, we’ll enable the directory view on the stage. Enabling directory opens up a number of additional options when working with stages. We will be using the ability to enumerate the files in the stage for this demo in a later step.

USE ROLE ST_DEMO_ROLE;

USE DATABASE ST_DEMO_DB;

CREATE OR REPLACE SCHEMA ST_INTERNAL_STAGES;

CREATE OR REPLACE STAGE ST_INTERNAL_STAGES.ST_DEMO_STAGE

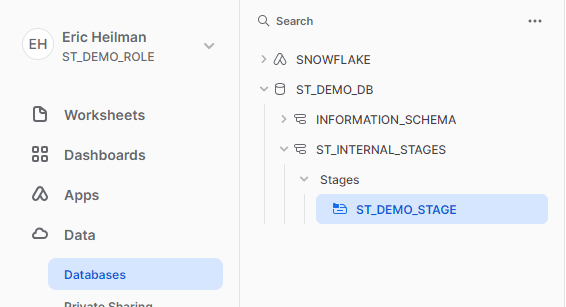

DIRECTORY = (ENABLE=TRUE);Now that all the objects have been created, in the Snowsight UI, switch to the ST_DEMO_ROLE, click on Data | Databases and your object tree should look similar to what’s shown below.

Upload a File to Snowflake with Snowsight

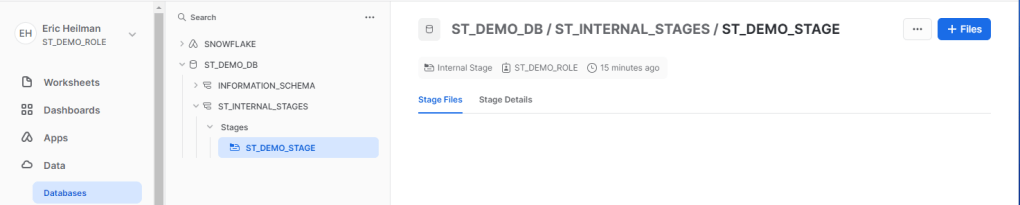

Now that we’ve created a place to land our file, we can upload a file using the Snowsight. Currently, files are limited to 50mb from Snowsight. To upload file file, click on the ST_DEMO_STAGE in the object tree and then click on the +Files button in the upper right corner.

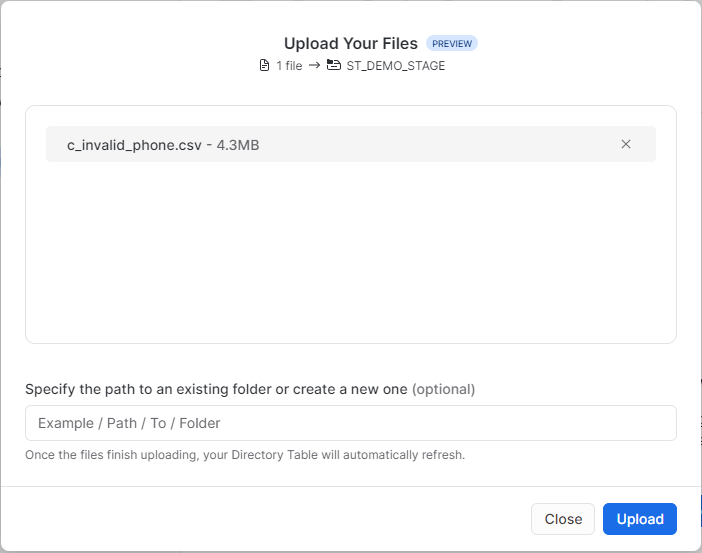

Next, drag or browse to the file you wish to upload to the stage, and click upload. Below we’re uploading a .csv file of invalid phone numbers from a calling campaign.

Once the file is uploaded, we can see the file listed in the stage view since we enabled the directory option when creating the stage. Snowflake may warn you that a warehouse is needed to view the files, if so, choose the demo warehouse ST_DEMO_XS_WH.

From here the file can be copied into a Snowflake table, removed, renamed, etc. But for now, we’re simply focusing on staging the file for use later.

Staging a File to Internal Stages with SnowSQL

SnowSQL is a stand alone command line interface for connecting to Snowflake and executing SQL queries and other commands. Files can also be loaded to an internal stage with a PUT command using SnowSQL without the 50mb limitations. Installation and configuration details for SnowSQL can be found in the Snowflake Documentation. I’m not going to cover SnowSQL in detail here as covering all the options for install and configuration could be its own post, I only mention it here as an additional option for staging files to Snowflake.

Conclusion

In this post we covered the basics of getting started with Snowflake data loading and stepping through the process of creating a stage and uploading a file. Over the next few posts, we’ll cover creating an external stage with an open source Amazon S3 bucket and using Python with Snowpark to post a file to an internal stage.

4 thoughts on “Snowflake: Getting Started with Stages”